Are you looking for the best SERP APIs? With so many SERP APIs available, it can be difficult to decide which one is right for your business. With the ever-changing landscape of search engine algorithms, companies need to stay up-to-date with the latest SERP APIs.

With the right SERP API, you can power your business with accurate SERP data and earn your customers’ trust.

This blog post will provide an overview of the top SERP APIs on the market and offer advice on how to choose the best one for your needs. We’ll discuss the features and benefits of each option, their pricing structures, and support options, as well as the pros and cons of using them.

By the end of this post, you’ll have a better understanding of which SERP API is the best fit for your business and be able to make an informed decision. Read on to learn more about the best SERP APIs of the year.

What is a SERP API?

A simple keyword search on the internet returns a wealth of information, so search engines narrow the results to the URLs most relevant to the query. Ranking first in search engine results pages (SERPs) is every website owner’s goal because it makes the site easier to discover and tends to raise conversion rates.

SERPs are the pages a search engine returns after you enter a query into the search field. Google and Bing constantly update their crawlers and ranking algorithms. As a result, scraping SERP data with a SERP API is an important way to keep track of where your pages stand in the results.

SERP APIs are software tools that let you scrape search engine results in real time: you send requests from the programming language of your choice and receive responses in a defined format. These APIs enable you to assess, track, and optimize the search engine visibility of your website. Typical features include:

- Data scraping in real-time

- Strong proxies that allow for increased security

- Integration with many programming languages

- Handling large volumes of requests with precision

- Simple to personalize.

- In most APIs, responses can be returned in JSON, HTML, or CSV format.

- Most work with major search engines such as Google and Bing.

- The bulk of them provide a free trial period.
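To illustrate the output formats mentioned in the list above, here is a minimal sketch that converts a JSON response into CSV using only the Python standard library. The payload shape (an `organic_results` list with `position`, `title`, and `url` fields) is a hypothetical example and not any specific provider's schema.

```python
import csv
import io
import json

# Hypothetical JSON payload; real providers use their own field names.
SAMPLE_RESPONSE = """
{
  "organic_results": [
    {"position": 1, "title": "Example A", "url": "https://a.example/"},
    {"position": 2, "title": "Example B", "url": "https://b.example/"}
  ]
}
"""


def organic_results_to_csv(raw_json: str) -> str:
    """Convert the organic results of a JSON SERP payload into CSV text."""
    results = json.loads(raw_json).get("organic_results", [])
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer,
        fieldnames=["position", "title", "url"],
        extrasaction="ignore",  # silently drop fields we did not ask for
        lineterminator="\n",
    )
    writer.writeheader()
    writer.writerows(results)
    return buffer.getvalue()


if __name__ == "__main__":
    print(organic_results_to_csv(SAMPLE_RESPONSE))
```

If your provider can return CSV directly, a conversion step like this is unnecessary; it is mainly useful when you want JSON for processing and CSV for spreadsheets.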

How do APIs work?

A SERP tracking API sends queries to the search engine and retrieves the results. These results include the ranking of a site’s pages, header information, keywords and keyword recommendations, and other information needed for SEO analysis.

A SERP API eliminates the need to insert data into your database manually. Instead, it collects massive volumes of SERP data for you, filters out the irrelevant data, and inserts the essential data directly into your system for speedy processing. Furthermore, SERP APIs can extract data automatically at regular intervals, allowing you to stay up to date with rapidly changing data, such as SEO rankings.
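The collect, filter, and store flow described above can be sketched as follows. The response structure, the `rankings` table, and the `store_rankings` function are illustrative assumptions, and an in-memory SQLite database stands in for your own system.

```python
import sqlite3
from datetime import date

# Hypothetical parsed API response; field names are assumptions.
response = {
    "query": "best serp api",
    "organic_results": [
        {"position": 1, "url": "https://a.example/", "title": "A", "snippet": "..."},
        {"position": 2, "url": "https://b.example/", "title": "B", "snippet": "..."},
    ],
    "ads": [{"url": "https://ad.example/"}],
}


def store_rankings(conn, payload, checked_on):
    """Keep only position and URL of each organic result and insert them."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS rankings "
        "(checked_on TEXT, query TEXT, position INTEGER, url TEXT)"
    )
    # Filtering step: ads, titles, and snippets are dropped here.
    rows = [
        (checked_on, payload["query"], item["position"], item["url"])
        for item in payload.get("organic_results", [])
    ]
    conn.executemany("INSERT INTO rankings VALUES (?, ?, ?, ?)", rows)
    conn.commit()
    return len(rows)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    inserted = store_rankings(conn, response, date.today().isoformat())
    print(f"Inserted {inserted} rows")
```

Running a function like this on a schedule (for example, once a day per keyword) gives you a history of rankings to compare over time.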

Why should you scrape SERP data?

Search engines are a gold mine of vital data for SEO specialists; hence, they are frequently subjected to web scraping. Scraping enables such specialists to collect data from the result pages, known as SERPs, and export it to a more usable format. SERPs contain a significant amount of information that is useful for SEO tasks, and a few of these details are briefly discussed below.

Keyword Data

SERPs include sections such as “People also search for,” rich snippets, and “People also ask,” which contain keywords that you can use to create better SEO-based content that appeals to both Google and your audience. A search engine results tracking API will help your SEO efforts by providing the keywords that the top-ranking sites are targeting, allowing you to adjust your on-page content.

Ranking Data

The ultimate aim of SEO is ranking. To do this, content creators work hard to provide well-researched material. However, to assess whether their efforts are paying off or whether they are wasting their time, they must watch the ranking of their content for those keywords.
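As a rough illustration of rank tracking, the sketch below looks up the position of a domain in a list of organic results. The result structure and the `find_rank` helper are hypothetical; a real provider's response will use its own field names.

```python
from urllib.parse import urlparse


def find_rank(organic_results, domain):
    """Return the position of the first result hosted on `domain`, or None."""
    for item in organic_results:
        host = urlparse(item["url"]).hostname or ""
        # Match the bare domain as well as subdomains such as "www.".
        if host == domain or host.endswith("." + domain):
            return item["position"]
    return None


# Hypothetical organic results for one keyword.
results = [
    {"position": 1, "url": "https://competitor.example/guide"},
    {"position": 2, "url": "https://www.mysite.example/blog/serp"},
    {"position": 3, "url": "https://mysite.example/pricing"},
]

if __name__ == "__main__":
    print(find_rank(results, "mysite.example"))
    print(find_rank(results, "missing.example"))
```

Storing the returned position for each keyword on every run lets you see whether a piece of content is climbing or slipping.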

Other Types of Data

Your search may return data other than keyword or ranking data, depending on the search engine you are using. Google, for example, shows a local business section, a Google Ads area, and maps.

The Best SERP APIs of 2026: A Comprehensive Guide

ScraperAPI

ScraperAPI is a solution for large-scale web scraping that removes the worry of getting blocked or blacklisted.

ScraperAPI handles proxies, browsers, and CAPTCHAs for you. You can get HTML from any web page with a single API call.

It is an excellent option for businesses wishing to extract Google SERP results for SEO operations and market intelligence on a tight budget. It’s easy to set up since all you have to do is send a GET request to the API endpoint with the API key and URL.
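The single GET request described above might look like the following sketch. The endpoint URL and the `api_key` and `url` parameter names are assumptions to confirm against the provider's documentation, and `SCRAPER_API_KEY` is a hypothetical environment variable name. Without a key, the script only prints the request URL and makes no network call.

```python
import os
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumed endpoint and parameter names; confirm against the provider's docs.
API_ENDPOINT = "https://api.scraperapi.com/"


def build_request_url(api_key: str, target_url: str) -> str:
    """Build the GET URL carrying the API key and the page to fetch."""
    return API_ENDPOINT + "?" + urlencode({"api_key": api_key, "url": target_url})


def fetch_html(api_key: str, target_url: str, timeout: float = 60.0) -> str:
    """Send the GET request and return the response body as text."""
    with urlopen(build_request_url(api_key, target_url), timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")


if __name__ == "__main__":
    key = os.environ.get("SCRAPER_API_KEY")  # hypothetical variable name
    target = "https://www.google.com/search?q=serp+api"
    if key:
        print(fetch_html(key, target)[:200])
    else:
        print(build_request_url("YOUR_API_KEY", target))
```

Note that the target URL is percent-encoded by `urlencode`, which matters because a Google search URL contains its own `?` and `=` characters.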

ScraperAPI has a pool of about 40 million IP addresses and supports roughly 40 locations from which to obtain local data.

It is a low-cost provider that offers new users a seven-day free trial with 5,000 requests. ScraperAPI offers a plan for any budget, with rates ranging from $29 per month for 250,000 Google pages to Enterprise plans for hundreds of millions of Google pages per month.

Smartproxy

Smartproxy enables you to obtain structured and complete data from search engines with a single API call. You will receive a full-stack solution that includes a web scraper, data parser, and proxy network, returning JSON or HTML with a 100% success rate.

A custom-built scraper will not work reliably on its own; you will also need a pool of high-quality proxies, which Smartproxy provides.

Smartproxy takes care of all the tedious labor, such as compiling lists of prices, discounts, descriptions, availability, and names. Working in the background, it automates market research, data collection, and competitor strategy analysis.

It takes only a few milliseconds to provide you with the data you want. Pricing starts at $100 per month and includes 35,000 API requests, a proxy network, a web scraper, and a data parser. Not satisfied? Request a full refund within three days of registering.

Oxylabs

Oxylabs is a web scraping API that is well-known for its proxy management and support for headless browsers. The API runs JavaScript on websites and switches IP addresses for each web request, allowing you to retrieve raw HTML without being blocked.

Among the items that may be retrieved are organic results, ads, local results, related searches, and related questions.

A proxy server sits between the user and the wider internet, and its purpose is to give the user security and anonymity. In terms of cost, Oxylabs offers fairly priced packages; the most basic one is $99 per month.

SERPstack

Serpstack is a real-time, accurate Google Search results API. This JSON REST API is trusted by some of the world’s top businesses and is lightning-fast and straightforward to use.

APILayer, a software company based in London, UK, and Vienna, Austria, created and maintains the solution. The product grew out of an internal need to track search engine rankings automatically and became one of the most reputable SERP APIs on the market.

APILayer is the firm behind some of the world’s most popular API and SaaS applications, including Currencylayer, Invoicely, and Eversign. When an API request is made, the Serpstack API automatically gathers SERP data from search engines by employing a proxy network and proprietary scraping technologies.

The SERP data it gathers is publicly available information that anyone could reach through a regular search. Furthermore, the Serpstack API supports almost all of the Google search result categories, including web results, image results, video results, news results, shopping results, sponsored advertisements, queries, and much more.

Bright Data

Bright Data is one of the best-known providers in today’s proxy industry, offering a wide range of specialized features and services. Formerly known as Luminati, Bright Data has been serving customers for many years. It began as a proxy operator and has since grown into a leading data collection provider as well.

Bright Data’s next-generation SERP API provides an automated and configurable flow of data on a single dashboard, regardless of the size of the collection.

Data sets are tailored to your organization’s requirements, ranging from e-commerce trends and social network data to competitive intelligence and market research. You can focus on your main market by gaining automatic access to reliable data in your industry.

This SERP checker API is a pay-as-you-go service. The fee for 1,000 requests is $3.50. Subscriptions range from $500 per month for 200,000 requests to $30,000 per month for 100 million requests.
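To compare plans on an equal footing, it can help to convert each one to a cost per 1,000 requests. The sketch below does this arithmetic using only the plan prices quoted in this article; the plan labels are informal names chosen for the example, and current prices should be checked with each provider.

```python
# Plan figures as quoted in this article: (monthly price in USD, included requests).
# The labels are informal names for this example, not official plan names.
PLANS = {
    "ScraperAPI entry plan": (29, 250_000),
    "Smartproxy entry plan": (100, 35_000),
    "Bright Data $500 plan": (500, 200_000),
    "Bright Data $30,000 plan": (30_000, 100_000_000),
}


def cost_per_thousand(monthly_price: float, included_requests: int) -> float:
    """Return the effective price in USD per 1,000 requests."""
    return monthly_price * 1000 / included_requests


if __name__ == "__main__":
    for name, (price, requests) in PLANS.items():
        print(f"{name}: ${cost_per_thousand(price, requests):.3f} per 1,000 requests")
```

For Bright Data, this shows the $500 subscription working out to $2.50 per 1,000 requests, compared with $3.50 on pay-as-you-go.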

SERPMaster

SERPMaster is a web scraper for Google. It falls under the category of SERP APIs, which are specialized tools for gathering information from search engines, such as organic results, ads, product information, and so on.

The primary function of a SERP API is to retrieve the search page and then extract the appropriate data from it. It takes care of proxy management, CAPTCHAs, and data processing for you. Your main concern should be to create the correct query and submit it to the API. You should expect structured results with a 100% success rate in return. As you may expect, Google scraping is a very competitive field.

SERPMaster is still relatively new on the market, and it has been attempting to carve out a niche for itself. Currently, its primary market is small to medium-sized firms that need to deal with Google. SERPMaster takes pride in being the most affordable Google SERP scraper, with assured data delivery for every request received.

Getting started with the API is simple: code samples are supplied for cURL, Python, PHP, and Node.js, as well as for running queries from a web browser.

Apify

Apify is a web scraping and automation tool that lets you create an API for any website. It includes a built-in proxy service that provides residential and datacenter proxies for data extraction. The Apify Store provides ready-to-use scraping tools for popular websites such as Instagram, Facebook, Twitter, and Google Maps, as well as custom scraping and extraction solutions of any size.

Apify provides a Google Search scraper that pulls a list of organic and paid results, snack packs, and other data.

Its capabilities include searching within a specific location and extracting custom data. You can also track the rankings of your competitors and analyze ads for the keywords you want to target.

Zenserp

Zenserp makes it simple for users to retrieve and scrape search engine result pages in real time, without interruptions. It can be integrated with a few lines of code to help monitor a website’s SERP rankings; examples are available for cURL, Python, Node.js, and PHP. It is highly scalable, offering consistent performance even under very heavy usage.

Zenserp offers an intuitive API that returns results in JSON format. The API mimics human behavior and returns SERPs the way a typical user would see them. The API architecture is robust enough to retrieve SERPs in real time.

It also allows users to get search engine results based on geolocation. Zenserp is another popular option for scraping Google search results, and it was designed with “speed” in mind. You can call it from a browser or from cURL, Python, Node.js, or PHP.

Proxycrawl

ProxyCrawl is an easy-to-use web scraping tool designed for both developers and non-developers. The platform supports all sorts of crawling projects and is used by leading firms like H&M, Shopify, Expedia, Pinterest, Oracle, Samsung, and others.

Its capabilities allow firms to scrape and crawl websites without having to manage global proxies themselves. Users can use the Crawling API to collect data for their applications quickly. In addition, the Crawler feature allows users to scrape web pages for large-scale projects that may require massive volumes of data.

ProxyCrawl’s structured data helps enterprises streamline their SEO efforts efficiently. Users may also capture screenshots of web pages and save them in a convenient location.

Furthermore, the platform provides a range of valuable lead management features that assist organizations with emails. They may also save all scraped data in the cloud and access it from a single location. In addition to collecting screenshots of the complete page in JPEG format, ProxyCrawl allows users to leverage back-connect proxy functions.

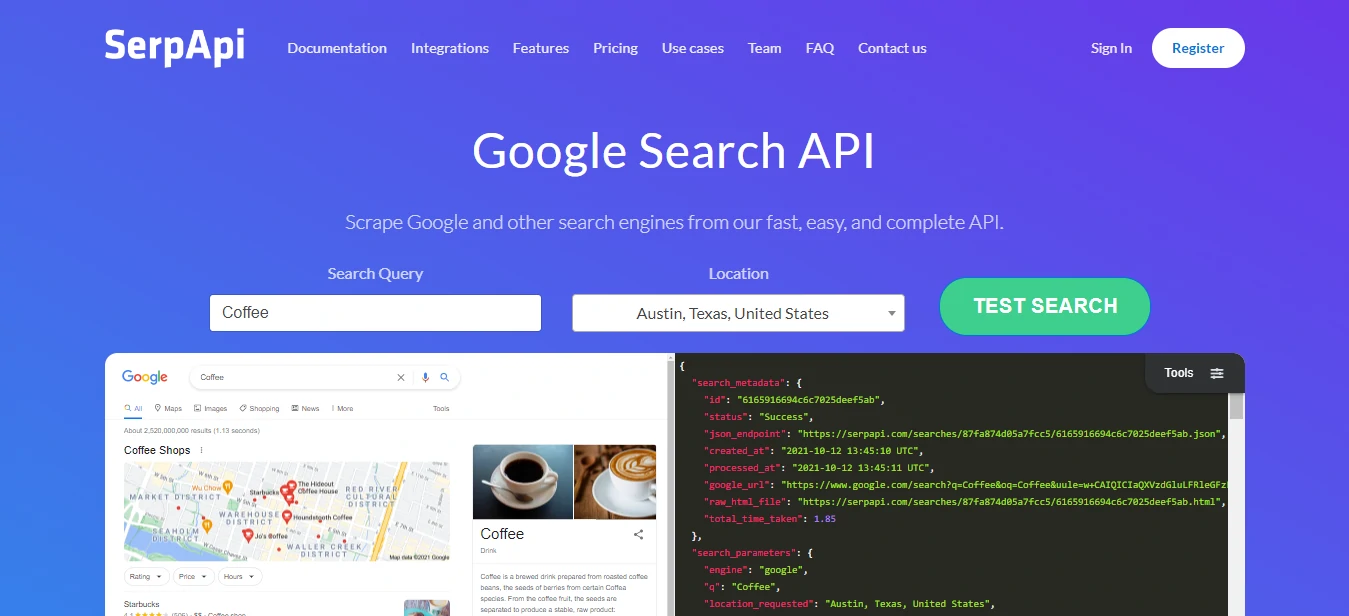

SerpApi

SerpApi executes API requests in real time, resulting in quick responses. The API supports accessing SERPs from specified locations via global proxy servers and can solve CAPTCHAs. Most structured data is retrieved correctly, including pricing, reviews, thumbnail images, and snippets.

SerpApi guarantees a throughput of up to 20% of your monthly searches per hour, with a search time of 2 to 5 seconds on average. SerpApi reports a success rate of 99.9% for searches conducted in the previous 30 days. Used by IBM, Harvard University, and The HOTH, SerpApi is packed with sophisticated capabilities that can make SERP scraping a breeze.
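To make the throughput figure concrete, the short calculation below turns a monthly quota into an hourly ceiling using the 20% share quoted above. The 5,000-search plan is a hypothetical size chosen for the example, not a quoted plan.

```python
def hourly_search_cap(monthly_searches: int, share_percent: int = 20) -> int:
    """Upper bound on searches per hour, given a share of the monthly quota."""
    return monthly_searches * share_percent // 100


if __name__ == "__main__":
    # 5,000 monthly searches is a hypothetical plan size.
    print(hourly_search_cap(5_000))  # prints 1000
```

In other words, a burst of rank checks has to fit within one fifth of the monthly quota in any given hour, which is worth keeping in mind when scheduling large batches.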

With SerpApi, you get real-time, accurate results, just as a regular user would see them. It allows you to receive search results from any location on Earth. SerpApi is suitable for anyone who wants to obtain data from search engines without worrying about data quality or speed. SerpApi has put in a lot of work to create a search engine API that can reliably provide you with millions of search results per month with practically impeccable data quality.

FAQs

To start using a SERP API, register with your preferred provider. Log in to your account and request an authorization key, also known as an API access token. The key enables you to submit API requests. Google SERP APIs allow you to extract information from SERPs such as organic results, paid results, featured snippets, and location-specific results, depending on the endpoint you call.

Traditional SEO work and SERP APIs both aim at top rankings in search results, but APIs offer distinct advantages. Real-time analytics let you react quickly to ranking shifts and track progress precisely. Deeper competitor insights help you position your site strategically. Finally, targeting specific queries by location and device is far easier with an API than with manual SEO methods. With SERP APIs, website optimization gains precision and agility.

A SERP API enables fast, precise data extraction and makes it practical to collect comprehensive search engine results. Its main downside is cost: subscriptions and other ongoing expenses. Manual analysis cannot match that speed and accuracy, but it is a budget-conscious alternative, albeit with potential limitations in data depth and timeliness. Deciding between the two methods hinges on balancing accuracy, speed, and budget.

As previously stated, SERP APIs are web scrapers. Web scraping was long considered a legal grey area. However, a US court sided with hiQ Labs in its dispute with LinkedIn, reasoning that hiQ’s scraping of LinkedIn was legal because the data was publicly accessible and not hidden behind a barrier or password. On the same reasoning, scraping SERPs is generally considered legal because they are publicly available.

Leveraging SERP APIs provides a competitive advantage by swiftly accessing competitor data for informed SEO strategies. Real-time search engine ranking insights enable the identification of competitor weaknesses to improve search visibility. Furthermore, tracking organic search trends allows for aligning website content and design with current market demands. Embracing SERP APIs empowers businesses to enhance competitiveness and elevate their performance levels efficiently.

Which search engines a SERP API supports depends on the specific provider, but common options include Google, Bing, Yahoo, DuckDuckGo, and others.

Common use cases for SERP APIs include:

- SEO monitoring and tracking

- Competitive analysis and research

- Content optimization based on search trends

- Location-based targeting for marketing campaigns

- Ad campaign optimization

When choosing a SERP API, consider:

- Supported search engines and features

- Pricing model and usage limits

- Documentation and support resources

- Provider’s reputation and reliability

Costs vary depending on factors like the volume of API requests, the depth of data required, and the features offered by the API provider. Some APIs offer free tiers with limited functionality, while others require subscription fees.

SERP APIs are valuable tools for optimizing SEO strategies because they provide actionable insights into keyword performance, competitor analysis, and trends in search engine results.

Conclusion

SERP APIs are essential for any business that wants to stay competitive and informed about the latest search engine trends. There is a wide range of options available, each with its strengths and weaknesses.

When choosing the best SERP API for your business, consider factors such as cost, accuracy, performance, and scalability. By doing your research and asking the right questions, you can find the perfect SERP API to meet your needs. With the right SERP API in place, you can stay ahead of the competition and maximize your search engine visibility.