The world of artificial intelligence is in the middle of a historic boom. The global AI market, valued at a staggering $184.04 billion in 2024, is on a trajectory to exceed $826 billion by 2030. The machine learning segment, the engine driving much of this innovation, is projected to surge from approximately $94 billion in 2025 to over $329 billion by 2029, growing at an incredible compound annual growth rate (CAGR) of nearly 37%. This explosion is fueled by unprecedented investment, with U.S. private AI funding alone hitting $109.1 billion in 2024.

This AI gold rush, however, has created a critical bottleneck that threatens to stifle the very innovation it promises: a severe scarcity of computational power. For developers, startups, and researchers, the challenges are immense. High-performance Graphics Processing Units (GPUs), the specialized chips essential for training and running AI models, are in desperately short supply. The demand for cutting-edge hardware like NVIDIA’s H100 GPUs far outstrips manufacturing capacity, leading to long waits and frustrating procurement hurdles. This scarcity allows major cloud providers—the hyperscalers—to charge exorbitant prices, creating a formidable barrier to entry for anyone without a massive budget.

Beyond just cost and availability, the complexity of managing the required infrastructure—from specialized power and cooling to intricate software optimization—demands a level of expertise that many smaller teams simply do not possess. This leaves countless brilliant ideas stranded, unable to access the power needed to bring them to life.

This is the exact problem that Runpod was built to solve. Emerging as a specialized cloud GPU provider, Runpod directly confronts these pain points by aiming to democratize access to high-performance compute. It offers a more flexible, developer-friendly, and, most importantly, affordable compute platform designed to empower the builders, tinkerers, and innovators who are being priced out or left behind by the established giants.

Runpod Overview

Runpod is a cloud GPU computing platform founded in 2021 with the mission to make powerful GPUs accessible and affordable for AI/ML developers, researchers, and companies of all sizes. It was created by a small team of engineers and cloud computing enthusiasts focused on solving a key issue in AI development: GPU scalability without sky-high cloud bills.

Unlike traditional cloud providers, Runpod lets you spin up GPU-backed containers in seconds—on-demand, persistent, or serverless—without paying for idle time or unnecessary features. Their growing network includes 30+ data center regions across North America, Europe, and Asia.

Runpod is managed by RunPod Inc., a private U.S.-based company headquartered in Moorestown, New Jersey. Although the exact team size isn’t public, estimates suggest 50+ employees, with a focus on product engineering, AI infrastructure, and customer support.

The company has partnered with platforms like Replit, Civitai, and Runway and is trusted by thousands of developers for training LLMs, running inference APIs, and more. Whether you’re a solo developer fine-tuning a model or a startup deploying a production-scale AI service, Runpod offers performance and flexibility—without the typical cloud headaches.

| Attribute | Details |
|---|---|
| Product Name | Runpod |
| Official Website | https://runpod.io |
| Developers | RunPod Inc. |
| USPs | Per-second billing, FlashBoot (fast startup), no egress fees |
| Category | Cloud GPU Computing / AI Infrastructure |
| Integrations | Docker, Python SDK, REST API, CLI, CI/CD pipelines |
| Best For | ML/AI developers, startups, data scientists, LLM researchers |
| Support Options | Documentation, email support, Discord community |
| Documentation | docs.runpod.io |
| Company Headquarters | Moorestown, New Jersey, USA |
| Starting Price | $0.34/hour (for RTX 4090) |
| Alternatives | Lambda Labs, CoreWeave, AWS EC2, Google Cloud, TensorDock |
| Affiliate Program | Yes |
| Affiliate Commission | Approx. 20% (confirm via affiliate onboarding) |
| Money-back Guarantee | No formal policy; usage is prepaid per-second |
| Data Centers | 30+ Regions Globally |
| Launch Year | 2021 |
| Employee Count | Estimated 50+ |

Runpod Key Features

Serverless GPU Compute

Honestly, the Serverless GPU Compute felt like I finally had a team of invisible assistants. Instead of constantly worrying about setting up and maintaining servers for my machine learning models’ inference APIs, I just flicked a switch (metaphorically speaking, of course!). Runpod handled all the messy backend stuff, leaving me free to focus on the actual AI magic. It was like having a powerful engine that just works without needing to pop the hood.

Persistent Volumes

This one’s a real money-saver and a stress-reducer. Persistent Volumes mean I can save all my training progress and results, even if I have to shut down a session. It’s like having a trusty save point in a video game – I can pick up exactly where I left off without losing hours of work or having to re-run expensive computations. My time and cloud bill both send their thanks!
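To make the save-point idea concrete, here is a minimal sketch of a training loop that resumes from a checkpoint stored on a persistent volume. The `/workspace/checkpoints` path and the `CHECKPOINT_DIR` environment variable are assumptions for illustration, not something Runpod guarantees; point them at wherever your volume is actually mounted. The loop body is a toy stand-in for real training.

```python
import json
import os
import tempfile
from pathlib import Path

# Assumption: the persistent volume is mounted at /workspace. Adjust as needed.
CHECKPOINT_DIR = Path(os.environ.get("CHECKPOINT_DIR", "/workspace/checkpoints"))


def save_checkpoint(state: dict, directory: Path = CHECKPOINT_DIR) -> Path:
    """Write state to a temp file, then rename it, so a stop mid-write cannot corrupt it."""
    directory.mkdir(parents=True, exist_ok=True)
    target = directory / "latest.json"
    fd, tmp_name = tempfile.mkstemp(dir=str(directory), suffix=".tmp")
    with os.fdopen(fd, "w") as handle:
        json.dump(state, handle)
    os.replace(tmp_name, target)  # atomic when source and target share a filesystem
    return target


def load_checkpoint(directory: Path = CHECKPOINT_DIR) -> dict:
    """Return the last saved state, or a fresh one if nothing was saved yet."""
    target = directory / "latest.json"
    if not target.exists():
        return {"step": 0}
    with target.open() as handle:
        return json.load(handle)


def train(total_steps: int, directory: Path = CHECKPOINT_DIR, save_every: int = 100) -> dict:
    """Toy loop: resumes from the stored step and checkpoints periodically."""
    state = load_checkpoint(directory)
    for step in range(state["step"], total_steps):
        state["step"] = step + 1  # a real loop would run one training step here
        if state["step"] % save_every == 0:
            save_checkpoint(state, directory)
    save_checkpoint(state, directory)
    return state
```

If the pod is stopped at step 250 and restarted later, `train(400)` picks up at step 250 instead of starting over, which is where the savings come from.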

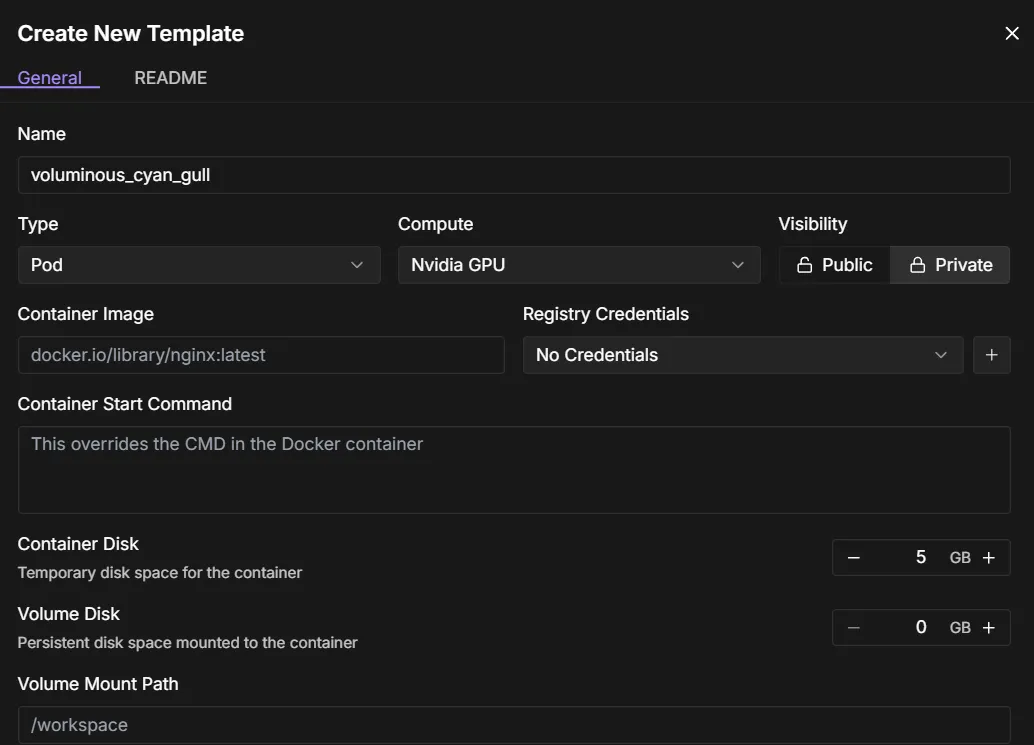

Templates

Remember those days of endless terminal commands just to get a project off the ground? Well, with Custom Templates, those days are thankfully behind me. I built templates for my favorite models, like LLaMA and Whisper, and now, starting a new project is literally a single click. It’s transformed my workflow from a fussy setup chore into a smooth, instant launch. Pure bliss!

Smart Shutdowns & Discord Pings

Now, this is where the smart automation kicks in! I’ve set up Auto-shutdown & Webhooks to be my personal financial watchdogs. Runpod can automatically shut down my instances when they’re not needed, and I even get little pings on Discord (my team’s chat app) to let me know. It’s brilliant for keeping costs in check without me constantly hovering over my usage. No more accidentally leaving a super-expensive GPU running all weekend!
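For the Discord side, a ping is just an HTTP POST with a JSON body containing a `content` field, sent to a webhook URL that Discord generates for a channel. The sketch below uses only the Python standard library. The URL is a placeholder, and the function names and `User-Agent` value are my own illustrative choices rather than anything Runpod ships.

```python
import json
import urllib.request

# Placeholder: Discord generates the real URL when you create a webhook for a channel.
WEBHOOK_URL = "https://discord.com/api/webhooks/<id>/<token>"


def build_discord_request(webhook_url: str, message: str) -> urllib.request.Request:
    """Build (but do not send) a POST carrying a plain-text Discord message."""
    # Discord limits the content field to 2000 characters, so truncate defensively.
    body = json.dumps({"content": message[:2000]}).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=body,
        headers={
            "Content-Type": "application/json",
            # Some endpoints reject urllib's default user agent, so set our own.
            "User-Agent": "pod-notifier/0.1",
        },
        method="POST",
    )


def notify(webhook_url: str, message: str, timeout: float = 10.0) -> int:
    """Send the message and return the HTTP status code."""
    request = build_discord_request(webhook_url, message)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

Calling `notify(WEBHOOK_URL, "Pod stopped after 30 idle minutes")` from whatever script stops the pod is enough to get the message into the team channel.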

Prebuilt ML Images

If you’ve ever wrestled with Docker configurations for machine learning, you know the pain. Runpod’s Prebuilt ML Images are a godsend. Whether I needed an environment for Stable Diffusion, Code LLMs, or just something general, they had ready-to-launch setups. It meant I could jump straight into coding and creating, skipping the tedious setup dance entirely.

CLI & SDK Access

For someone like me who loves to automate everything, CLI and SDK Access is like finding the ultimate cheat code. I can control my deployments and manage my resources directly through command-line tools or write Python scripts to handle things automatically. It gives me a level of precision and control that really streamlines my more complex workflows.

How to use Runpod?

Step 1: Create Your Runpod Account

- Visit runpod.io and click Sign Up.

- Use your email or Google account to register.

- Verify your email and set up 2FA for added security.

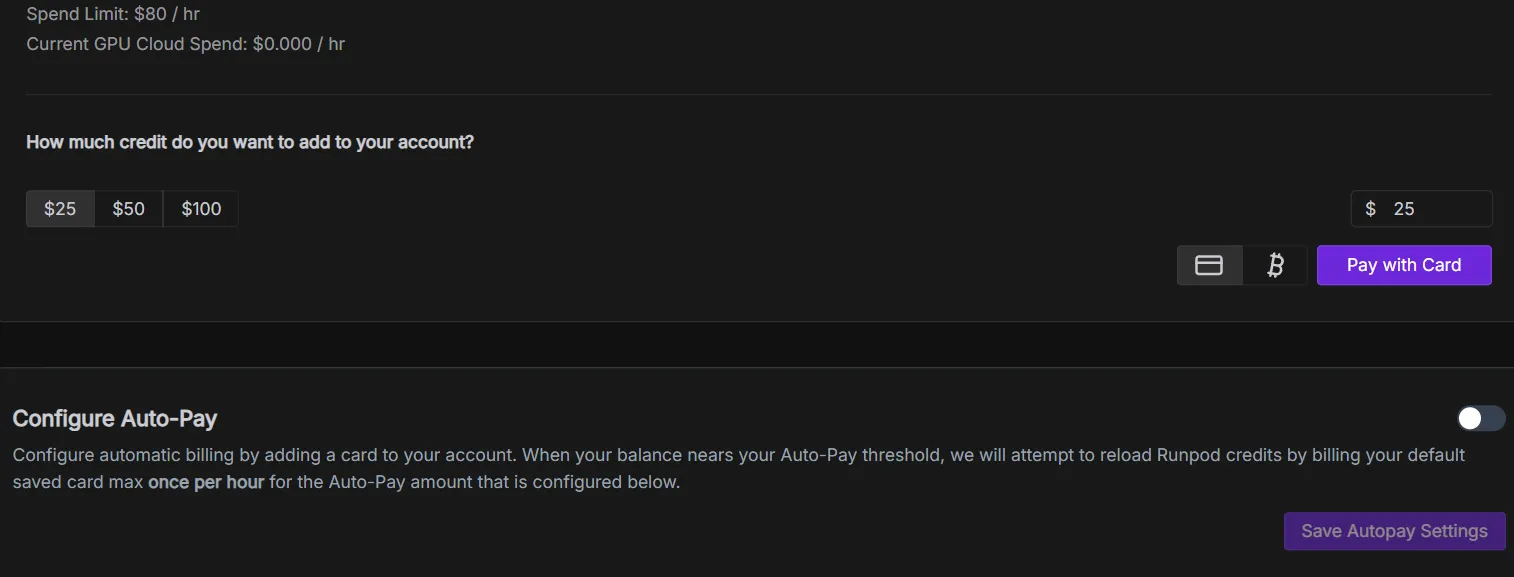

Step 2: Add Credits

- Go to your dashboard and click Billing.

- Add credits using a credit card, Stripe, or crypto (like Bitcoin).

- You’ll need credits to deploy GPU pods.

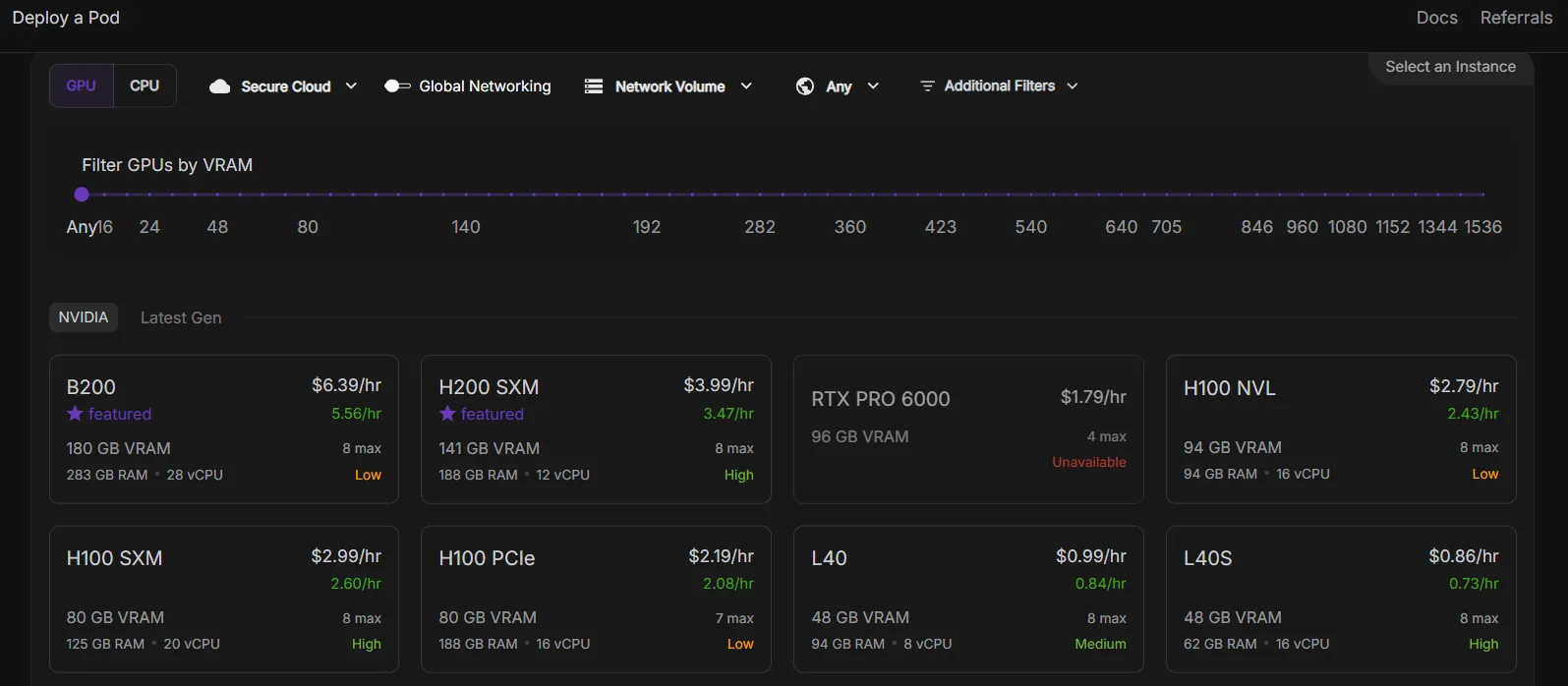

Step 3: Deploy a GPU Pod

- Navigate to the Pods section.

- Click Deploy and choose a GPU (e.g., A100, RTX 3090).

- Select a template (like Stable Diffusion, LLaMA, or Jupyter).

- Configure:

  - Pod Name

  - Storage Volume (optional but useful for saving work)

  - Pricing Type: On-Demand or Spot

- Click Deploy On-Demand to launch your pod.

Step 4: Connect to Your Pod

- Once the pod is running, click Connect.

- Choose your interface:

  - JupyterLab for notebooks

  - VS Code Server for coding

  - Gradio/Streamlit for web apps

Step 5: Run Your Workload

- Upload your code or use pre-installed environments.

- For ML models, you can:

  - Clone from GitHub

  - Install dependencies via terminal

  - Use Hugging Face models directly

Step 6: Automate with Webhooks or CLI

- Use the Runpod CLI or REST API for automation.

- Set up auto-shutdown to save costs.

- Trigger alerts via Discord webhooks.
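If you want to script the auto-shutdown part yourself, the core decision can be sketched as a small watchdog: stop the pod once GPU utilization has stayed below a threshold for several samples in a row. Everything here is illustrative. `IdleWatchdog` and its `stop_pod` callback are hypothetical names, and the callback is where you would wire in whichever Runpod CLI or API call you actually use.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class IdleWatchdog:
    """Calls stop_pod once utilization stays below a threshold for N consecutive samples."""

    stop_pod: Callable[[], None]  # hypothetical callback wrapping your CLI or API call
    threshold_percent: float = 5.0
    idle_samples_needed: int = 3
    _idle_streak: int = field(default=0, init=False)
    stopped: bool = field(default=False, init=False)

    def observe(self, gpu_utilization_percent: float) -> bool:
        """Record one utilization sample; return True once the pod has been stopped."""
        if self.stopped:
            return True
        if gpu_utilization_percent < self.threshold_percent:
            self._idle_streak += 1
        else:
            self._idle_streak = 0  # any activity resets the countdown
        if self._idle_streak >= self.idle_samples_needed:
            self.stop_pod()
            self.stopped = True
        return self.stopped
```

Requiring consecutive idle samples, rather than reacting to a single low reading, avoids shutting down during a short pause between training epochs or inference requests.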

Step 7: Save or Terminate

- Stop the pod when done to avoid extra charges.

- Use persistent volumes to save your data.

- Terminate the pod if you no longer need it.

Runpod Use Cases

Runpod is built for speed, flexibility, and affordability—making it an excellent choice for a variety of modern AI and compute-heavy workloads. Whether you’re a solo developer, a scaling startup, or part of a research lab, Runpod offers the tools and infrastructure to power your ideas.

Here are the most common and effective use cases for Runpod in 2026:

1. Training & Fine-Tuning Large Language Models (LLMs)

Runpod is an excellent platform for training and fine-tuning large language models like LLaMA, Falcon, or Mistral. It offers access to powerful GPUs such as the A100, H100, and even the H200, which are ideal for handling the intense computational requirements of these models.

Because Runpod charges by the second and has no data egress fees, it significantly reduces training costs compared to traditional cloud providers. This makes it a smart option for developers and research teams building or customizing LLMs at scale.

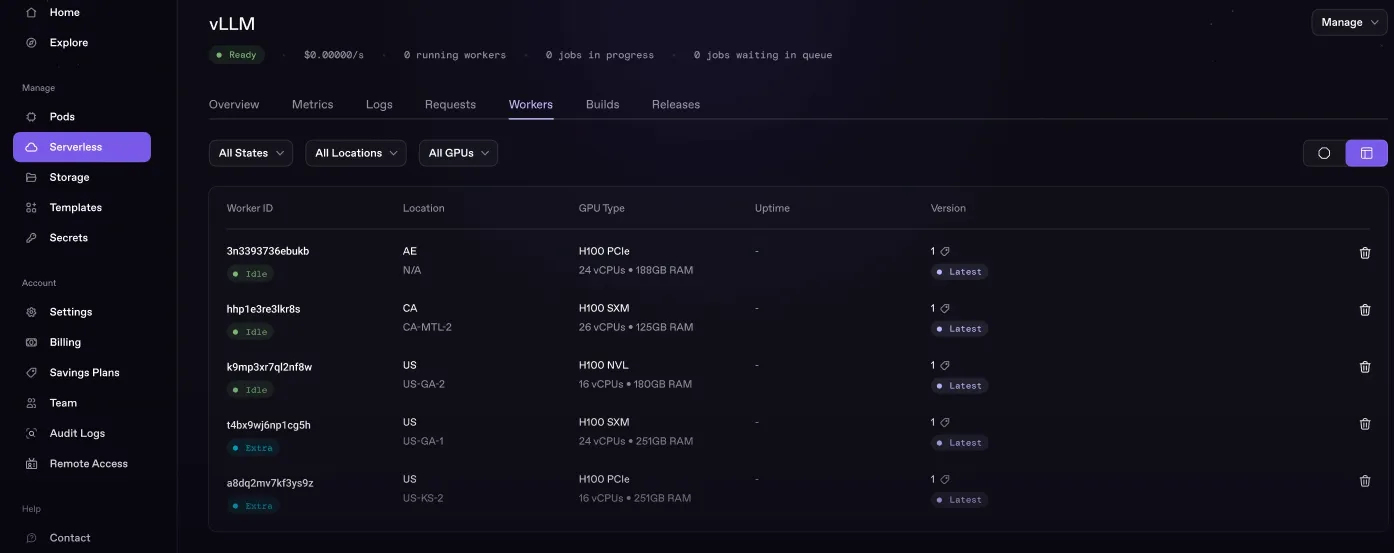

2. AI Inference & API Hosting

Runpod’s serverless GPU pods with FlashBoot technology allow developers to deploy AI inference APIs that start in under 200 milliseconds. This makes it suitable for real-time applications like AI chatbots, recommendation systems, summarization tools, and generative services.

The ability to auto-scale based on demand ensures you only pay when your API is in use, making it highly cost-effective for production deployments and bursty workloads.
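To give a feel for what a serverless worker looks like, here is a minimal sketch of a handler function: it takes one job, reads the `input` payload, and returns a JSON-serializable result. The `summarize` helper is a stand-in for a real model call, and the `text` and `max_words` input fields are made up for this example. The `main` function assumes the `runpod` Python SDK is installed in the worker image and exposes `runpod.serverless.start`; check the current docs before relying on that exact call.

```python
from typing import Any, Dict


def summarize(text: str, max_words: int = 20) -> str:
    """Stand-in for a real model call: keeps the first max_words words."""
    words = text.split()
    return " ".join(words[:max_words])


def handler(job: Dict[str, Any]) -> Dict[str, Any]:
    """Handle one job: validate the input payload and return a result dict."""
    payload = job.get("input", {})
    text = payload.get("text")
    if not isinstance(text, str) or not text.strip():
        return {"error": "input.text must be a non-empty string"}
    return {"summary": summarize(text, int(payload.get("max_words", 20)))}


def main() -> None:
    # Assumption: the runpod SDK is available inside the worker container.
    import runpod

    runpod.serverless.start({"handler": handler})
```

Keeping the handler a plain function means it can be unit-tested locally, for example `handler({"input": {"text": "a b c d", "max_words": 2}})`, before any GPU time is spent.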

3. Image & Video Generation (Stable Diffusion, SDXL, RunwayML)

With high-performance GPUs like the RTX 4090 and A6000, Runpod is a top choice for creative professionals working on Stable Diffusion, RunwayML, or SDXL projects. You can deploy pre-configured environments with just a few clicks or run custom versions with full control.

Artists and developers love using Runpod to generate high-resolution images and videos without investing in expensive local hardware. Templates for tools like Civitai, Automatic1111, and InvokeAI are widely supported.

4. Machine Learning & Deep Learning Experiments

If you’re experimenting with models in PyTorch, TensorFlow, or JAX, Runpod provides a powerful and affordable backend. Developers can quickly spin up pods, run experiments, test hyperparameters, and iterate—all without worrying about long-term cloud costs.

With custom Docker support and an intuitive CLI/API, setting up and managing training environments is simple. It’s perfect for hackathons, Kaggle competitions, or academic research.

5. Scientific Computing & Simulation

Runpod isn’t limited to AI—it’s also a great option for GPU-accelerated scientific computing tasks. You can use it to run simulations in physics, chemistry, genomics, and other scientific fields that rely heavily on parallel computation.

Multi-GPU clustering makes it suitable for distributed processing, while flexible billing ensures you only pay for what you use.

6. CI/CD and Dev Environments

Developers can use Runpod as a cloud-based development machine that supports GPU workloads. This is especially useful for AI-powered apps where local resources aren’t enough. Runpod integrates well with GitHub, GitLab, and Bitbucket, making it ideal for continuous integration and testing pipelines. Whether you’re testing ML pipelines, automating deployments, or debugging in a GPU-backed container, it provides a reliable environment for dev teams.

7. Secure & Isolated Cloud Workloads

For teams that need compliance and security, Runpod offers a Secure Cloud option. This provides tenant isolation, dedicated resources, and protection for sensitive data—ideal for industries like healthcare, finance, or government.

You get peace of mind knowing your workloads are safe, while still benefiting from high-performance compute infrastructure.

8. Model Deployment & Demo Hosting

Runpod allows developers to host machine learning demos and production-ready models using persistent or serverless GPU pods. It’s a great way to showcase your AI projects to clients, investors, or users without the complexity of setting up your own cloud infrastructure.

Whether you’re launching an MVP or creating a live interactive demo, Runpod gives you everything you need to go from idea to deployment fast.

Runpod Pricing

Runpod’s most disruptive feature is its pricing. The platform’s core philosophy is to deliver powerful GPU compute at a fraction of the cost of traditional cloud providers, with a transparent, pay-as-you-go model that eliminates financial surprises. Billing is granular—charged per minute for Pods and per second for Serverless—ensuring you only pay for what you use.

Crucially, Runpod does not charge for data ingress or egress. For anyone working with the massive datasets and models common in AI, this is a massive and often overlooked cost-saver compared to the hyperscalers, where data transfer fees can quickly balloon into a significant portion of the monthly bill.

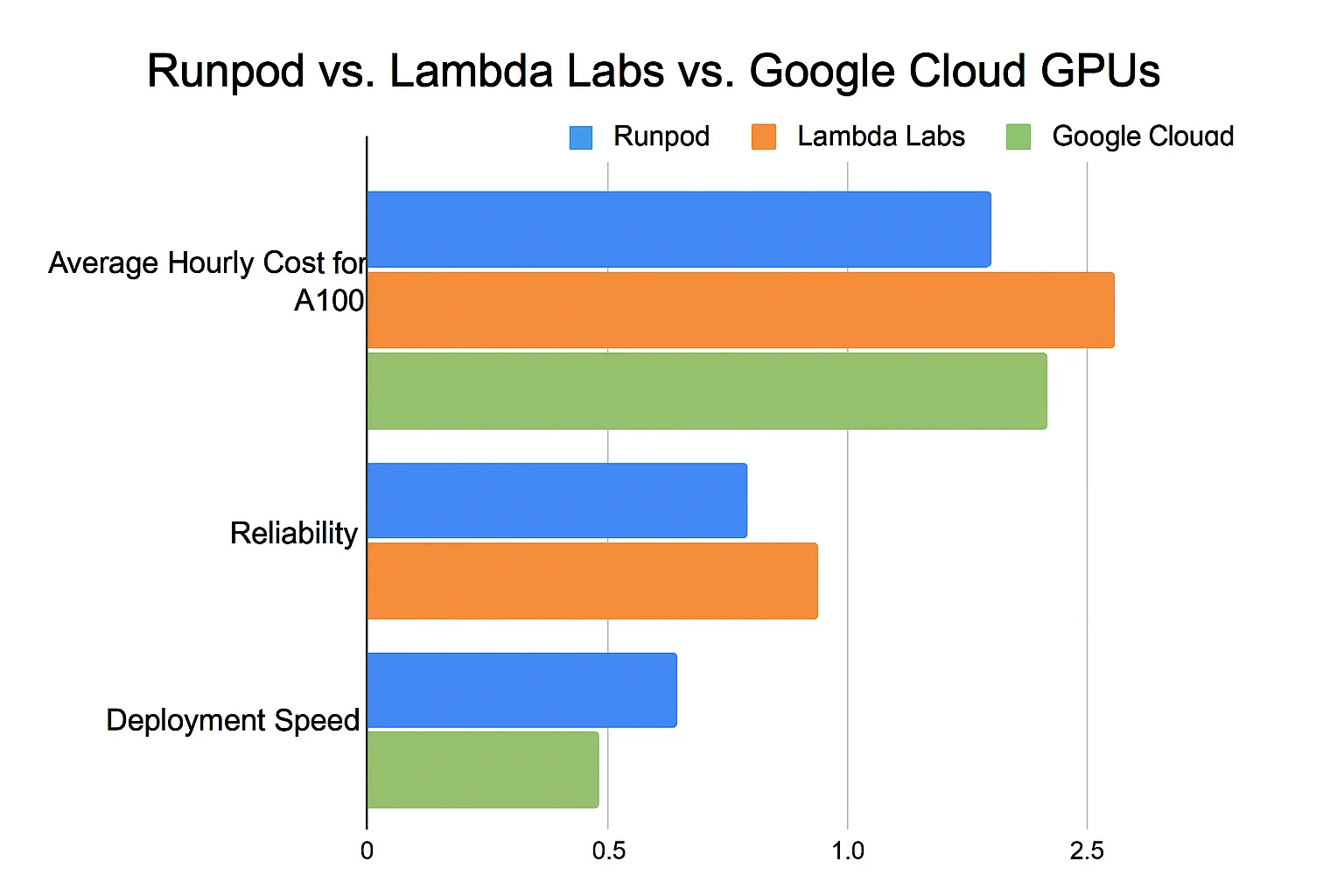

The cost savings when compared directly to major clouds are dramatic. Analysis shows that for identical high-performance GPUs, Runpod is consistently and significantly cheaper:

- NVIDIA H100 (80GB): On Runpod, this GPU costs around $2.79/hr. On Google Cloud Platform (GCP), the same instance is $11.06/hr, and on Amazon Web Services (AWS), it’s $12.29/hr. This represents a staggering 77% cost reduction compared to AWS.

- NVIDIA A100 (80GB): Runpod offers this at $1.19/hr, while GCP charges $3.67/hr and AWS charges $7.35/hr. This is a cost saving of up to 84% versus AWS.

- NVIDIA L40S (48GB): A popular choice for inference, this GPU costs $0.79/hr on Runpod compared to $2.00/hr on GCP, a 60% savings.
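The percentages in this list are easy to sanity-check. A quick sketch, using only the hourly prices quoted in the bullets above (the function name is mine):

```python
def savings_percent(runpod_price: float, other_price: float) -> float:
    """Percentage saved relative to the other provider's hourly price."""
    return (other_price - runpod_price) / other_price * 100


# (Runpod price, competitor price) in USD per hour, as quoted above.
quoted = {
    "H100 vs AWS": (2.79, 12.29),
    "A100 vs AWS": (1.19, 7.35),
    "L40S vs GCP": (0.79, 2.00),
}

for label, (runpod_price, other_price) in quoted.items():
    print(f"{label}: {savings_percent(runpod_price, other_price):.1f}%")
```

This works out to roughly 77.3%, 83.8%, and 60.5%, in line with the rounded figures quoted above.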

For users trying to decide on a plan, a good strategy is to start with Spot instances in the Community Cloud for learning and experimentation, as GPUs like the RTX 4090 offer an unbeatable price-to-performance ratio. For more serious work like production inference or long training runs, using On-Demand instances of GPUs like the L40S or A100 in the Secure Cloud provides the necessary reliability while still offering significant cost savings over hyperscalers.

| GPU Model | VRAM | System RAM | vCPUs | On-Demand Price/hr (Secure Cloud) |
|---|---|---|---|---|
| NVIDIA H200 SXM | 141 GB | 276 GB | 24 | $3.99 |
| NVIDIA H100 PCIe | 80 GB | 188 GB | 16 | $2.39 |
| NVIDIA H100 SXM | 80 GB | 125 GB | 20 | $2.69 |
| NVIDIA A100 PCIe | 80 GB | 117 GB | 8 | $1.64 |
| NVIDIA A100 SXM | 80 GB | 125 GB | 16 | $1.74 |
| NVIDIA L40S | 48 GB | 94 GB | 16 | $0.86 |
| NVIDIA RTX 6000 Ada | 48 GB | 167 GB | 10 | $0.77 |
| NVIDIA RTX A6000 | 48 GB | 50 GB | 9 | $0.49 |
| NVIDIA RTX 4090 | 24 GB | 41 GB | 6 | $0.69 |
| NVIDIA RTX 3090 | 24 GB | 125 GB | 16 | $0.46 |
| NVIDIA L4 | 24 GB | 50 GB | 12 | $0.43 |
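To turn these hourly rates into a budget, a rough estimator helps. The sketch below copies a few prices from the table above and assumes billing is prorated per second with partial seconds rounded up. That rounding rule is my assumption, and storage charges are deliberately left out.

```python
import math

# On-demand Secure Cloud prices in USD per GPU-hour, copied from the table above.
HOURLY_PRICE_USD = {
    "H100 SXM": 2.69,
    "A100 SXM": 1.74,
    "L40S": 0.86,
    "RTX 4090": 0.69,
}


def run_cost(gpu: str, seconds: float, gpu_count: int = 1) -> float:
    """Estimated compute cost of a run, prorated per second (storage not included)."""
    billed_seconds = math.ceil(seconds)  # assumption: partial seconds round up
    return HOURLY_PRICE_USD[gpu] / 3600 * billed_seconds * gpu_count


# A 6-hour fine-tuning run on 8x H100 SXM, and a 90-second test on one RTX 4090.
print(f"8x H100 SXM for 6 h: ${run_cost('H100 SXM', 6 * 3600, 8):.2f}")
print(f"RTX 4090 for 90 s:   ${run_cost('RTX 4090', 90):.4f}")
```

The first case comes to about $129 and the second to under two cents, which is why short experiments on consumer-class GPUs cost next to nothing.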

Runpod Real User Reviews

Here are some real-user insights and reviews on Runpod, showcasing both the praise and the pain from various communities:

👍 Positive Feedback

From Trustpilot:

- A Verified user shared:

“The pod launched in under a minute… per‑hour pricing was both affordable and transparent… UI was excellent—clean, intuitive, and quick to configure.”

- Another praised:

“Affordable cloud GPU rentals, around $0.20–$0.50 per hour, with easy setup and preloaded frameworks… solid performance for model training.”

From G2:

- A small-business reviewer rated it 4.5/5, saying “RunPod is the best way to experiment and learn… integrated templates, user‑friendly UI/UX.”

👎 Constructive Criticism

From Reddit and Trustpilot:

- A user on r/StableDiffusion noted:

“Runpod also charges $8/month/20 Gb just to reserve that storage space… makes it very expensive to use a pod for long term storage.”

- Another wrote:

“Network speed is absolutely horrid… about 70‑100 kbps so takes ~5 hours to download the model that only took an hour to create.”

- One Redditor described reliability issues:

“IO is so slow… terminal connection will disconnect quite regularly… half the money you spent will be wasted time.”

- And a Trustpilot review highlighted billing surprises:

“If you stop a pod without deleting it, you will be charged for daily storage without a warning.”

Runpod earns solid marks for accessibility, affordability, and ease of deployment—perfect for quick tasks, experimentation, and lightweight production use. However, users should be aware of storage fees, IO limits, and intermittent connectivity issues, particularly for long-term or large-scale data workflows.

Alternatives

| Platform | Starting Price (Approx) | Best For | Key Features | Notable Drawbacks |
|---|---|---|---|---|
| Lambda Labs | ~$1.10/hr (A100) | Deep learning, research teams | High-performance GPUs, SSH access, Jupyter support | Limited automation tools |
| Vast.ai | ~$0.25/hr (3090) | Budget-conscious users | Decentralized GPU marketplace, flexible pricing | Inconsistent performance, UI quirks |
| Google Cloud GPUs | ~$2.48/hr (A100) | Enterprise-scale applications | Seamless GCP integration, autoscaling, global infra | Expensive, complex setup |
| CoreWeave | ~$0.85/hr (A6000) | ML workloads, rendering | Kubernetes-native, fast provisioning, ML-optimized | Limited documentation for beginners |
| Paperspace | ~$0.40/hr (RTX 4000) | Developers, educators | Gradient notebooks, automation, team collaboration | Slower support, fewer GPU options |
| Modal Labs | ~$1.50/hr (A100) | Serverless ML workflows | Fully serverless, Python-native, scalable APIs | Higher cost, limited GPU |

Runpod Customer Support

Alright, let’s talk about getting help when you’re using Runpod, because even the smoothest rides hit a bump now and then! The good news is, they’ve got a few ways to lend a hand, so you’re not just screaming into the void.

First up, there’s their Discord Community. This is honestly one of my favorite spots. It’s like a bustling coffee shop where everyone’s talking shop about AI. You can pop in, ask a question, and usually, someone from the community (or even a Runpod engineer!) will jump in with a quick answer. It’s awesome for quick troubleshooting or just swapping tips and tricks.

If you need something a bit more direct, they’ve got a Support Chat. Perfect for those moments when you just need a quick nudge in the right direction.

And of course, for those times when you prefer to write things out, there’s good old Email Support at [email protected]. Or, if you’re more into forms, you can always fill out a Support Ticket right on their website.

From what I’ve seen, they really try to cover all the bases – whether it’s their detailed documentation, handy FAQs, or these direct support lines. Plus, if you’re a startup in their special program, they roll out the red carpet with “Fast Track Support” (think priority access to their engineers on Slack) and even “white-glove migration” help. Pretty neat, right? It’s reassuring to know you’ve got backup when you’re building something cool.

What Makes Runpod Stand Out?

Runpod's edge over competitors comes down to transparency, speed, and flexibility. Per-second billing, zero data egress fees, and fast GPU access are rarely found together on traditional cloud platforms. FlashBoot lets serverless GPU pods launch in under 200ms, which gives it a serious edge for inference and production workloads.

Where the hyperscalers tend toward complex pricing models and slower provisioning, Runpod keeps both simple. The intuitive dashboard, robust API, and wide range of GPU choices (including A100, H100, and RTX 4090) make it a favorite among both solo developers and enterprise teams.

Add clustered GPU deployments, affordable persistent pods, and secure isolated environments, and Runpod offers strong value for the price.

FAQs

So, what’s Runpod anyway?

Think of it as your personal GPU supercomputer, but in the cloud! It’s specifically for folks like us who are building cool AI and machine learning stuff.

How do you pay for it?

It’s super flexible – you only pay for what you use, down to the second! No big upfront costs or wasted money on idle time. It’s like a pay-as-you-go phone plan for GPUs.

What kind of graphics cards can you get?

They’ve got a whole candy store of GPUs! From the more common ones like RTX cards to the serious heavy-hitters like NVIDIA’s A100s and H100s, and even AMD’s MI300X. You can pick the perfect muscle for your project.

Community Cloud vs. Secure Cloud – what’s the deal?

“Community Cloud” is usually the cheaper option. “Secure Cloud” costs more but adds tenant isolation and dedicated resources for your super important projects. Interruptions are more a matter of pricing type: a Spot pod can be paused when the capacity is needed elsewhere, while an On-Demand pod keeps running. It’s the difference between sharing a toy and having your own.

Can I use my own software setup?

Absolutely! If you’ve got your whole development environment neatly packaged in a Docker container, you can just plug it right into Runpod. Easy peasy.

Does it save your stuff?

Yep, it’s got persistent storage. So, all your datasets and model checkpoints are safe and sound, even if you shut down your instance and come back later. Plus, no extra fees for moving your data around!

Is it good for real-time AI?

Oh, totally! They’ve got tricks like “Active Workers” to prevent those annoying delays when your app first starts, and “serverless” options that automatically scale up globally. Perfect for when your AI needs to be lightning-fast.

Is there a free version?

Not a traditional “free tier,” but because they bill by the second, even tiny tests or experiments cost next to nothing. You really only pay for the actual compute time.

How does it handle growth?

Runpod’s got this awesome auto-scaling feature. If your project suddenly gets popular (or you just need to crunch a lot of data), it can spin up thousands of GPU workers in seconds. It’s like having an instant army of super-fast brains.

What do people usually use Runpod for?

Mostly, it’s for training and fine-tuning AI models, making predictions with those models, and tackling other heavy-duty computing tasks like rendering videos or running simulations. Basically, if it needs a serious GPU, Runpod’s probably on the job.

Conclusion

We’ve taken a deep dive—through live pricing checks, feature evaluations, and comparisons with major cloud players. Runpod stands out in 2026 as a highly flexible GPU cloud offering granular billing, global reach, rich tooling, and no hidden fees. It’s ideal for developers, startups, and enterprises looking for raw compute power with full control.

Our review was built on up-to-date pricing, hands-on feature research, and side-by-side analysis with AWS, Google Cloud, Lambda Labs, CoreWeave, TensorDock, and Replicate. While Runpod isn’t a fit for everyone—its pricing varies and lacks traditional money-back guarantees—it offers real advantages in cost transparency, startup agility, and scalability.

If you need GPU compute that’s pay-for-use, fast to start, and powerful enough for LLMs, Runpod is one of the top platforms to consider in 2026.

The Review

Runpod

Runpod is a fast, affordable cloud GPU platform offering per-second billing, zero egress fees, and instant deployment of powerful GPUs like A100 and RTX 4090. With FlashBoot for sub-200ms startups, intuitive UI, and scalable serverless pods, it’s a top choice for developers needing efficient AI compute on demand.

PROS

- Per-Second Billing

- FlashBoot Technology

- 30+ Global Regions

- Supports Top GPUs

- Developer-Friendly Tools

- Prebuilt Templates

- No Egress Fees

- Scalable GPU Clusters

- Flexible for All Users

- S3-Compatible Storage

CONS

- Slow Network Speeds

- Storage Costs Add Up

- Occasional Instability

- Limited Customer Support

- No Refunds